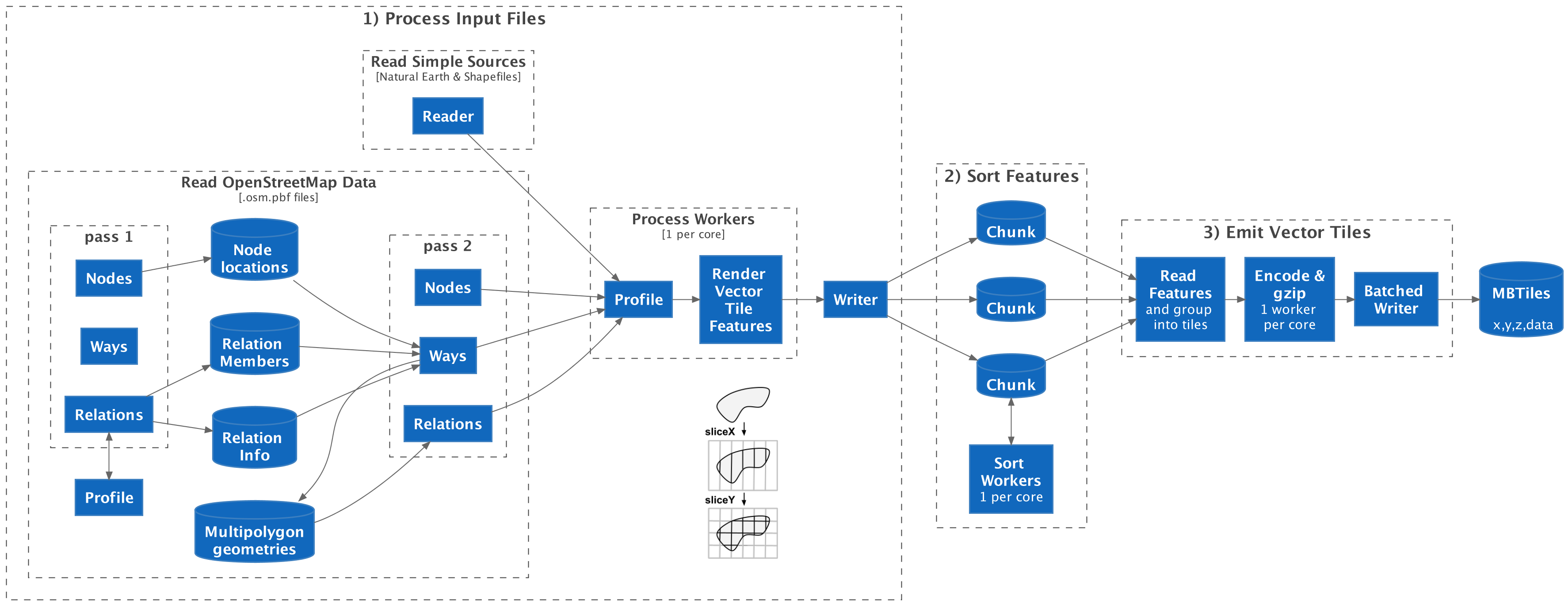

Planetiler Architecture

Planetiler builds a map in 3 phases:

- Process Input Files according to the Profile and write vector tile features to intermediate files on disk

- Sort Features by tile ID

- Emit Vector Tiles by iterating through sorted features to group by tile ID, encoding, and writing to the output tile archive

User-defined profiles customize the behavior of each part of this pipeline.

1) Process Input Files

First, Planetiler reads SourceFeatures from each input source:

- For "simple sources", NaturalEarthReader or ShapefileReader can get the latitude/longitude geometry directly from each feature

- For OpenStreetMap `.osm.pbf` files, OsmReader needs to make 2 passes through the input file to construct feature geometries:

  - pass 1:

    - nodes: store node latitude/longitude locations in-memory or on disk using LongLongMap

    - ways: nothing

    - relations: call `preprocessOsmRelation` on the profile and store information returned for each relation of interest, along with relation member IDs, in-memory using a LongLongMultimap

  - pass 2:

    - nodes: emit a point source feature

    - ways:

      - look up the latitude/longitude for each node ID to get the way geometry, and the relations that the way is contained in

      - emit a source feature with the reconstructed geometry, which can be either a line or a polygon depending on the `area` tag and whether the way is closed

      - if this way is part of a multipolygon, also save the way geometry in-memory for later in a LongLongMultimap

    - relations: for any multipolygon relation, fetch the member geometries and attempt to reconstruct the multipolygon geometry using OsmMultipolygon, then emit a polygon source feature with the reconstructed geometry if successful
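The two-pass idea can be illustrated with a minimal sketch. It is not Planetiler's reader: a plain `HashMap` stands in for LongLongMap, the `Way` class and all method names are hypothetical, and relations and the `area` tag are ignored.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy two-pass reader: pass 1 indexes node locations, pass 2 resolves way geometries. */
final class TwoPassSketch {
  /** Hypothetical stand-in for an OSM way: an ID plus the ordered node IDs it references. */
  static final class Way {
    final long id;
    final long[] nodeIds;

    Way(long id, long... nodeIds) {
      this.id = id;
      this.nodeIds = nodeIds;
    }
  }

  // Stand-in for LongLongMap: node ID -> {lon, lat}.
  private final Map<Long, double[]> nodeLocations = new HashMap<>();

  /** Pass 1: remember where each node is. */
  void pass1Node(long id, double lon, double lat) {
    nodeLocations.put(id, new double[] {lon, lat});
  }

  /** Pass 2: look up each node ID to rebuild the way's coordinate sequence. */
  List<double[]> pass2Way(Way way) {
    List<double[]> coords = new ArrayList<>();
    for (long nodeId : way.nodeIds) {
      double[] location = nodeLocations.get(nodeId);
      if (location != null) { // skip nodes that were never seen in pass 1
        coords.add(location);
      }
    }
    return coords;
  }

  /** A way can form a polygon ring only if it has at least 4 node refs and ends where it starts. */
  static boolean canBeRing(Way way) {
    int n = way.nodeIds.length;
    return n >= 4 && way.nodeIds[0] == way.nodeIds[n - 1];
  }
}
```

Ways cannot be resolved during the first pass because a way only lists node IDs, so every node location has to be indexed before any way geometry can be rebuilt.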

Then, for each SourceFeature, generate vector tile features according to the profile in a worker thread (default 1 per core):

- Call the `processFeature` method on the profile for each source feature

- For every vector tile feature added to the FeatureCollector:

  - Call FeatureRenderer#accept, which for each zoom level the feature appears in:

    - Scale the geometry to that zoom level

    - Simplify it in screen pixel coordinates

    - Use TiledGeometry to slice the geometry into subcomponents that appear in every tile it touches using the stripe clipping algorithm derived from geojson-vt:

      - `sliceX` splits the geometry into vertical slices for each "column" representing the X coordinate of a vector tile

      - `sliceY` splits each "column" into "rows" representing the Y coordinate of a vector tile

        - Uses an IntRangeSet to optimize processing for large filled areas (like oceans)

      - If any features wrapped past -180 or 180 degrees longitude, repeat with a 360 or -360 degree offset

    - Reassemble each vector tile geometry and round to tile precision (4096x4096)

      - For polygons, GeoUtils#snapAndFixPolygon uses JTS utilities to fix any topology errors (e.g. self-intersections) introduced by rounding. This is very expensive, but necessary since clients like MapLibre GL JS produce rendering artifacts for invalid polygons.

    - Encode the feature into a compact binary format using FeatureGroup#newRenderedFeatureEncoder, consisting of a sortable 64-bit `long` key (zoom, x, y, layer, sort order) and a binary value encoded using MessagePack (feature group/limit, feature ID, geometry type, tags, geometry)

    - Add the encoded feature to a WorkQueue
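The "scale to a zoom level" and "round to tile precision" steps can be sketched for a single point. This is an illustration under assumptions, not Planetiler's code: it uses the standard Web Mercator formulas, the class and method names are hypothetical, and it does not simplify, slice, buffer, or clamp coordinates at the edge of the world.

```java
/** Toy conversion of one lon/lat point to a tile index plus a position on that tile's 4096x4096 grid. */
final class TileCoordSketch {
  static final int EXTENT = 4096; // tile precision mentioned in the text

  /** Longitude mapped to [0, 1) across the world, west to east. */
  static double worldX(double lon) {
    return (lon + 180.0) / 360.0;
  }

  /** Latitude mapped to [0, 1] across the Web Mercator world, north to south. */
  static double worldY(double lat) {
    double sin = Math.sin(Math.toRadians(lat));
    return 0.5 - 0.25 * Math.log((1 + sin) / (1 - sin)) / Math.PI;
  }

  /** Returns {tileX, tileY, localX, localY} for the given zoom level. */
  static int[] toTile(double lon, double lat, int zoom) {
    double tilesAcross = 1 << zoom; // 2^zoom tiles per axis
    double x = worldX(lon) * tilesAcross;
    double y = worldY(lat) * tilesAcross;
    int tileX = (int) Math.floor(x);
    int tileY = (int) Math.floor(y);
    // Round the fractional position inside the tile to the integer grid.
    int localX = (int) Math.round((x - tileX) * EXTENT);
    int localY = (int) Math.round((y - tileY) * EXTENT);
    return new int[] {tileX, tileY, localX, localY};
  }
}
```

For example, `TileCoordSketch.toTile(-180.0, 0.0, 0)` yields `{0, 0, 0, 2048}`: the single zoom-0 tile, on its left edge, halfway down. Rounding to the integer grid is the step that can move vertices enough to create the invalid polygons described above.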

Finally, a single-threaded writer reads encoded features off of the work queue and writes them to disk using ExternalMergeSort#add

- Write features to a "chunk" file until that file hits a size limit (e.g. 1 GB), then start writing to a new file

2) Sort Features

ExternalMergeSort sorts all of the intermediate features using a worker thread per core:

- Read each "chunk" file into memory

- Sort the features it contains by 64-bit `long` key

- Write the chunk back to disk
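To show why a single `long` key makes each chunk cheap to sort, here is a minimal sketch. The bit layout is an assumption chosen for illustration (4 bits of zoom, 14 bits each for x and y, 8 bits of layer, 22 bits of sort order) and is not taken from Planetiler; the class and method names are hypothetical, and only keys are sorted, not the binary values that travel with them.

```java
import java.util.Arrays;

/** Toy sortable key: fields are packed most-significant first so numeric order equals (zoom, x, y, layer, sort order). */
final class SortKeySketch {
  /** Assumes 0 <= zoom < 16, 0 <= x, y < 2^14, 0 <= layer < 256, 0 <= sortOrder < 2^22. */
  static long encode(int zoom, int x, int y, int layer, int sortOrder) {
    return ((long) zoom << 58)
      | ((long) x << 44)
      | ((long) y << 30)
      | ((long) layer << 22)
      | sortOrder;
  }

  static int zoom(long key) {
    return (int) (key >>> 58);
  }

  static int y(long key) {
    return (int) ((key >>> 30) & 0x3FFF);
  }

  /** Everything above the layer bits identifies the tile. */
  static long tileId(long key) {
    return key >>> 30;
  }

  /** Sorting one in-memory chunk is a plain primitive sort; the input is left untouched. */
  static long[] sortChunk(long[] keys) {
    long[] sorted = keys.clone();
    Arrays.sort(sorted);
    return sorted;
  }
}
```

Because the top two bits stay zero in this layout, keys are never negative and the default signed comparison gives the intended order.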

3) Emit Vector Tiles

TileArchiveWriter is the main driver. First, a single-threaded reader reads features from disk:

- ExternalMergeSort emits sorted features by doing a k-way merge using a priority queue of iterators reading from each sorted chunk

- FeatureGroup collects consecutive features in the same tile into a `TileFeatures` instance, dropping features in the same group over the grouping limit to limit point label density

- Then TileArchiveWriter groups tiles into variable-sized batches for workers to process (complex tiles get their own batch to ensure workers stay busy while the writer thread waits for finished tiles in order)
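The merge-then-group read path can be sketched as follows. This is a simplified illustration, not ExternalMergeSort or FeatureGroup: chunks are in-memory `long[]` arrays rather than files, the tile ID is assumed to be `key >>> tileShift` for a caller-supplied shift, grouping limits are not applied, and all names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

/** Toy k-way merge over sorted chunks, followed by grouping of consecutive keys by tile. */
final class KWayMergeSketch {
  /** Read position within one sorted chunk. */
  private static final class Cursor {
    final long[] chunk;
    int pos;

    Cursor(long[] chunk) {
      this.chunk = chunk;
    }

    long head() {
      return chunk[pos];
    }
  }

  /** Repeatedly takes the smallest head among all chunks, so the output is globally sorted. */
  static long[] merge(List<long[]> sortedChunks) {
    PriorityQueue<Cursor> heap = new PriorityQueue<>((a, b) -> Long.compare(a.head(), b.head()));
    int total = 0;
    for (long[] chunk : sortedChunks) {
      total += chunk.length;
      if (chunk.length > 0) {
        heap.add(new Cursor(chunk));
      }
    }
    long[] out = new long[total];
    int i = 0;
    while (!heap.isEmpty()) {
      Cursor smallest = heap.poll();
      out[i++] = smallest.head();
      smallest.pos++;
      if (smallest.pos < smallest.chunk.length) {
        heap.add(smallest); // re-insert with its new head
      }
    }
    return out;
  }

  /** Starts a new group whenever the tile ID changes; relies on the input being sorted. */
  static List<List<Long>> groupByTile(long[] sortedKeys, int tileShift) {
    List<List<Long>> tiles = new ArrayList<>();
    List<Long> current = null;
    long currentTile = -1;
    for (long key : sortedKeys) {
      long tile = key >>> tileShift;
      if (current == null || tile != currentTile) {
        current = new ArrayList<>();
        tiles.add(current);
        currentTile = tile;
      }
      current.add(key);
    }
    return tiles;
  }
}
```

Only one element per chunk sits in the heap at a time, which is what lets the merge stream through data far larger than memory when the chunks are files.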

Then, process tile batches in worker threads (default 1 per core):

- For each tile in the batch, first check to see if it has the same contents as the previous tile to avoid re-encoding the same thing for large filled areas (e.g. oceans)

- Encode using VectorTile

- gzip each encoded tile

- Pass the batch of encoded vector tiles to the writer thread
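The "skip repeated tiles" optimization can be sketched with standard library classes only. This is an assumption-laden illustration: it compares already-encoded bytes and skips just the gzip step, whereas the text describes comparing tile contents before encoding, and the class and method names are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.zip.GZIPOutputStream;

/** Toy batch processor: gzip each tile, reusing the previous result when the input repeats. */
final class TileBatchSketch {
  static byte[] gzip(byte[] raw) {
    ByteArrayOutputStream bytes = new ByteArrayOutputStream();
    try (GZIPOutputStream gz = new GZIPOutputStream(bytes)) {
      gz.write(raw);
    } catch (IOException e) {
      throw new UncheckedIOException(e); // in-memory streams should not fail
    }
    return bytes.toByteArray();
  }

  /** Returns one compressed tile per input tile, in the same order. */
  static List<byte[]> compressBatch(List<byte[]> rawTiles) {
    List<byte[]> out = new ArrayList<>();
    byte[] previousRaw = null;
    byte[] previousCompressed = null;
    for (byte[] raw : rawTiles) {
      if (previousRaw == null || !Arrays.equals(raw, previousRaw)) {
        previousCompressed = gzip(raw); // new content: do the expensive work
        previousRaw = raw;
      }
      out.add(previousCompressed); // identical content: reuse the last result
    }
    return out;
  }
}
```

Comparing only against the previous tile is enough to catch long runs of identical tiles, such as open ocean, because the tiles arrive in sorted order.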

Finally, a single-threaded writer writes encoded vector tiles to the output archive format:

- For MBTiles, create the largest prepared statement supported by SQLite (999 parameters)

- Iterate through finished vector tile batches until the prepared statement is full, flush to disk, then repeat

- Then flush any remaining tiles at the end
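The batching arithmetic behind the writer can be sketched without a database. This sketch assumes the flat `tiles` table from the MBTiles specification (`zoom_level`, `tile_column`, `tile_row`, `tile_data`), which may differ from the schema Planetiler actually writes; the class and method names are hypothetical.

```java
import java.util.Arrays;

/** Toy planner for multi-row inserts under a fixed bound-parameter limit. */
final class BatchInsertSketch {
  static final int MAX_PARAMETERS = 999; // limit cited in the text
  static final int COLUMNS_PER_TILE = 4; // zoom_level, tile_column, tile_row, tile_data (assumed schema)

  /** How many tiles fit in one prepared statement. */
  static int tilesPerStatement() {
    return MAX_PARAMETERS / COLUMNS_PER_TILE;
  }

  /** Builds a multi-row INSERT with one "(?, ?, ?, ?)" group per tile. */
  static String insertSql(int tileCount) {
    StringBuilder sql = new StringBuilder(
      "INSERT INTO tiles (zoom_level, tile_column, tile_row, tile_data) VALUES ");
    for (int i = 0; i < tileCount; i++) {
      sql.append(i == 0 ? "" : ", ").append("(?, ?, ?, ?)");
    }
    return sql.toString();
  }

  /** Full batches first, then one smaller batch for whatever remains at the end. */
  static int[] batchSizes(int totalTiles) {
    int perStatement = tilesPerStatement();
    int fullBatches = totalTiles / perStatement;
    int remainder = totalTiles % perStatement;
    int[] sizes = new int[fullBatches + (remainder > 0 ? 1 : 0)];
    Arrays.fill(sizes, perStatement);
    if (remainder > 0) {
      sizes[sizes.length - 1] = remainder;
    }
    return sizes;
  }
}
```

Under these assumptions each statement carries 249 tiles, so 500 finished tiles would be written as two full statements plus a final flush of 2.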

Profiles

To customize the behavior of this pipeline, custom profiles implement the Profile interface to override:

- what vector tile features to generate from an input feature

- what information from OpenStreetMap relations we need to save for later use

- how to post-process vector features grouped into a tile before emitting

A Java project can implement this interface and add arbitrarily complex processing when overriding the methods. The custommap project defines a ConfiguredProfile implementation that loads instructions from a YAML config file to dynamically control how schemas are generated without needing to write or compile Java code.
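The three customization points can be pictured with a toy interface. This is deliberately not the real Profile interface: the method names `processFeature` and `preprocessOsmRelation` come from the text above, but `postProcess`, every parameter and return type, and the `RoadsOnlyProfile` class are simplified, hypothetical stand-ins.

```java
import java.util.List;
import java.util.Map;

/** Toy stand-in for the three hooks; NOT the real Planetiler Profile interface. */
interface ToyProfile {
  /** Decide which output features (here just layer names) an input feature produces. */
  void processFeature(Map<String, String> sourceTags, List<String> outputLayers);

  /** Return the relation info worth keeping for later, or null to ignore the relation. */
  default String preprocessOsmRelation(Map<String, String> relationTags) {
    return null;
  }

  /** Adjust the features grouped into one tile before they are emitted (hypothetical name). */
  default List<String> postProcess(List<String> tileFeatures) {
    return tileFeatures;
  }
}

/** Example implementation: emit a "roads" feature for highways and remember route refs. */
final class RoadsOnlyProfile implements ToyProfile {
  @Override
  public void processFeature(Map<String, String> sourceTags, List<String> outputLayers) {
    if (sourceTags.containsKey("highway")) {
      outputLayers.add("roads");
    }
  }

  @Override
  public String preprocessOsmRelation(Map<String, String> relationTags) {
    return "route".equals(relationTags.get("type")) ? relationTags.get("ref") : null;
  }
}
```

Default methods keep the simplest profile down to a single override, while the other two hooks stay available when a schema needs them.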